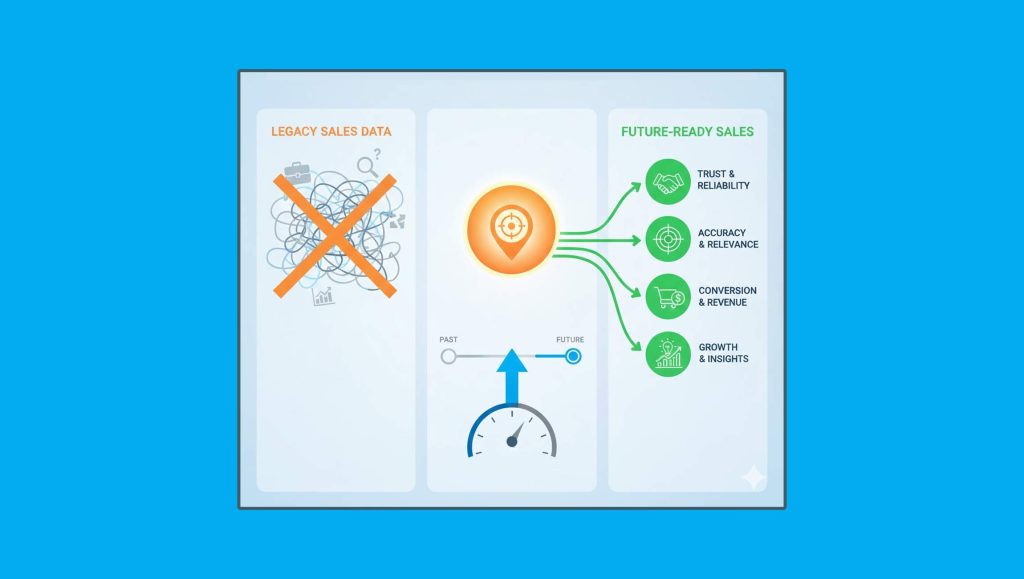

In this catch-up with SalesTechStar, Vaidya J.R., Senior Vice President and Global Head of Data and AI Business at Hexaware, shares a few thoughts on the growing benefits and impact of AutoML in today’s B2B-Tech marketplace:

Hi Vaidya, welcome to this SalesTechStar chat! Tell us about yourself and your role at Hexaware?

Thanks for having me here! I am deeply passionate about technology and business, and I love to bring the two together in a strategic manner. I am fortunate to be achieving exactly that in my current role as the SVP and Global Head of BI, Big Data, AI, and Analytics at Hexaware Technologies. Leading the Data and AI business, I take great pride in building, guiding, and motivating our immensely talented team of experts in designing, creating, and deploying powerful transformational Data, Analytics and AI solutions and services.

In my 25-plus years of experience, I have had the opportunity to work with a gamut of technologies (from PaaS to Cloud to Data & Analytics to IoT). I have been fortunate to provide thought leadership and develop strategies for several industries and to create impact in different roles (from consulting for organizations to building them, and from product management to delivery management, across geographies). This has given me the opportunity to lead the latest Data & AI technology transformations, both for Hexaware and for our clients, and I strive to give my best to both.

As an analytics evangelist and strategic change catalyst, I feel lucky and empowered to bring Hexaware’s unique transformational solutions, like AMAZE for Data & AI, to our diverse client base, which spans dozens of industries and geographies. The ultimate reward is when our teams deliver results and value to enterprises across the globe, thus fulfilling our purpose statement at Hexaware: “Creating smiles through great people and technology.”

We’d love to hear more about Hexaware’s recent partnership with DataRobot and how this impacts your core offering and end users?

The DataRobot and Hexaware partnership enables institutions to break through the barrier of low deployment success rates with the powerful capabilities of the DataRobot AI Cloud. The partnership combines Hexaware’s data science expertise in open-source statistical tools, NLP, and NLG with a fast-paced AutoML platform that drastically accelerates ML model building and deployment. It promises ML solutions that are “2x faster in data preparation and 5x faster in AI deployment,” thus leveraging data expertise and proficiency to productionize AI at scale in record time.

As part of the partnership, we have been working on multiple verticalized offerings. One such offering is a KYC (Know Your Customer) process for the banking industry that leverages Artificial Intelligence to help financial services businesses meet strict regulatory KYC standards.

How have you been observing the need and growth of AI driven platforms and cloud systems in the industry and what in your view are some of the base level challenges industries still face when implementing new systems?

The exit out of the pandemic will be through digital transformation. The world has been witnessing a boom in data for a while now, and the pandemic has only accelerated it. The world will inevitably need smarter and more efficient ways to store and manage data with cloud systems. In addition, there is a growing opportunity to utilize and make sense of this data through the growth of AI. This digital transformation will be enabled by speedy adoption of cloud systems and AI-driven platforms.

However, there are some universal challenges to cloud and AI adoption that we have observed. Some of the common critical questions that I usually get asked across our engagements are:

There is clearly more to AI platform and cloud adoption than meets the eye. Moreover, there exists no ‘one-size-fits-all’ AI solution. Every industry has different data and different business problems that it wants to solve in different contextual realities. It is here that trusted and experienced technology partners like us can deliver immense value. In particular, our latest AutoML platforms can be impactful in driving business value. With the times of black-box AI behind us, we have developed technology and platforms that adopt an explainable and open approach to AI, which is exactly what the ‘democratic’ AutoML platforms we offer here at Hexaware provide.

How in your view can industries enhance/optimize the use and adoption of AI to power overall production?

I strongly believe that the process to initiate or even optimize AI adoption can vary significantly from one industry to another, but the fundamental building blocks will essentially be the same. The following steps are relevant in my view:

For industries looking to build out their AI capabilities, what kind of teams, structures and processes can come in handy in ensuring fair deployment and optimized efforts across levels and functions?

I would like to share an important lesson learned from our various engagements in building and deploying models at scale for our customers across industries. The first thing I would like to mention is that you cannot just throw a data scientist at a problem and expect it to be solved; it takes a lot more than that. The entire community needs to come together. You need your Statisticians, Data Engineers, ML Ops Engineers, Business Analysts, Cloud Architects, Domain Specialists, Visualization Specialists, and even customer representatives to solve a problem effectively. This is what I call the Decision Sciences Community, which I have formed and nurtured to scale and to solve problems across industries. This also ensures a human-centric design and approach towards AI.

Implementing smart automation will help you enhance your entire AI value chain, right from exploratory data analysis to model deployment. AutoML platforms can help you optimize exploratory data analysis, feature engineering, identification of suitable models, deployment, model explainability, monitoring and retraining. As you know, there are established processes for ML model building, like CRISP-DM and SEMMA, which mandate the process steps for any AI/ML model building in a sequential fashion: business understanding, data understanding, data preparation, model building, evaluation, and a feedback loop to business understanding.

A few thoughts on the future of AI?

We know how AI is rapidly transforming the way we all work and live. We are calling data the new ‘oil.’ But what’s the point of having all the data if we can’t put it to effective use? So, what we need is an ‘engine’ that consumes the data and ‘powers’ the systems. That ‘engine’ is AI and ML. In this context, I’d like to share 5 prominent trends that we are observing, amongst others, when it comes to leveraging AI & ML:

The good news is that we at Hexaware are continuously monitoring the latest trends and actively preparing to bring the above to our customers. We are sure that AI promises some truly exciting times ahead for all of us!

Enterprises are creating huge amounts of data today, and they will continue accumulating it in the future. Very soon we will have zettabytes and yottabytes of data in all forms, shapes and sizes with every single enterprise. The biggest challenge for these enterprises will be how to put this humongous volume of data to effective use and how to drive business outcomes from it. In an environment where the data explosion is already hitting us hard, it is not feasible to rely on manual crunching of data, model building or deployment. One can thus imagine the huge impact AutoML can make; it will be a game changer!

Let’s take an example. Imagine you are a retailer that sells thousands of products across the globe. You want to forecast every item. It’s impossible to achieve that with manually built data models, which at best help in aggregate category planning! But AutoML models can help you forecast at the individual item level. That’s the power AutoML holds!

Some last thoughts and takeaways before we wrap up?

The most prominent impact of AutoML will be that Data Science will no longer remain the domain of an elite few in the organization. The ‘common masses’ will be able to develop and deploy models at scale, with everyone being able to use data to drive business impact. We call this the rise of ‘Citizen Data Scientists’. With AutoML, soon everybody in the organization will be a Data Scientist in their own right!

Hexaware is a fast-growing automation-led next-generation service provider delivering excellence in IT, BPO and Consulting services.

Vaidya J.R. is Senior Vice President and Global Head of Data and AI Business at Hexaware.
