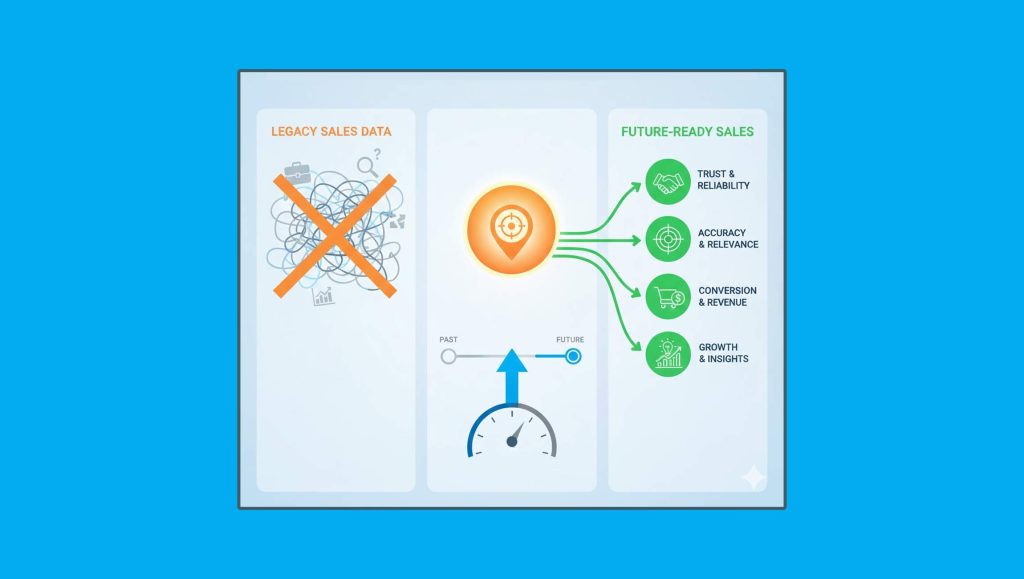

Innovation enables organizations to significantly accelerate actionable insights from video, audio and text-based data sources

Veritone, the creator of the world’s first operating system for artificial intelligence (AI), aiWARE™, announced it now supports the NVIDIA CUDA platform, enabling organizations across the public and private sectors to run intensive AI and machine learning (ML) tasks on NVIDIA GPUs, whether on-premises or in the Microsoft Azure and Amazon Web Services (AWS) clouds.

This Veritone innovation unlocks new performance levels for organizations using aiWARE, Veritone’s proprietary OS for AI, as they can now process massive amounts of video, audio and text dramatically faster and more accurately by using the parallel-processing computational power of the newest generation of NVIDIA GPUs.

The NVIDIA CUDA parallel computing platform and programming model enables dramatic increases in computing performance by harnessing the power of NVIDIA GPUs, which can process substantially more concurrent tasks than a central processing unit (CPU).

By taking advantage of the latest CUDA-compatible version of aiWARE running in the Azure and AWS clouds, organizations can use GPU auto-scaling to handle more demanding workloads than ever before, seamlessly scaling GPUs in the cloud whenever faster results are needed.

“The marriage of aiWARE and NVIDIA CUDA helps organizations realize artificial intelligence and machine learning solutions that can process vast amounts of data at unparalleled speeds,” said Veritone Founder and CEO Chad Steelberg. “We built aiWARE to uncover insights from video, audio and text data, at scale, in near real-time. Supporting the CUDA platform advances that mission.”