Deloitte’s recent AI in the Enterprise, 3rd Edition study of enterprise AI adopters found that 95% of respondents have concerns about the ethical risks of the technology. Further, more than 56% of respondents agree that their organization is slowing its adoption of AI technologies because of emerging risks. To help companies proactively address AI ethics and integrity, the Deloitte AI Institute announced its Trustworthy AI™ framework, which aims to guide organizations in applying AI responsibly and ethically within their businesses.


“C-suite executives and boards must ask tough questions about ethical use of technology and provide active governance to safeguard their organization’s reputation and preserve the trust of internal and external stakeholders,” said Irfan Saif, AI co-leader, Deloitte & Touche LLP. “Organizations must demonstrate readiness to manage the new breed of risk that comes with human-machine collaboration. Our Trustworthy AI framework provides a common language to help organizations develop the appropriate safeguards and use AI in an ethical manner.”

Deloitte’s Trustworthy AI framework introduces six dimensions for organizations to consider when designing, developing, deploying and operating AI systems. The framework helps manage common risks and challenges related to AI ethics and governance, including:

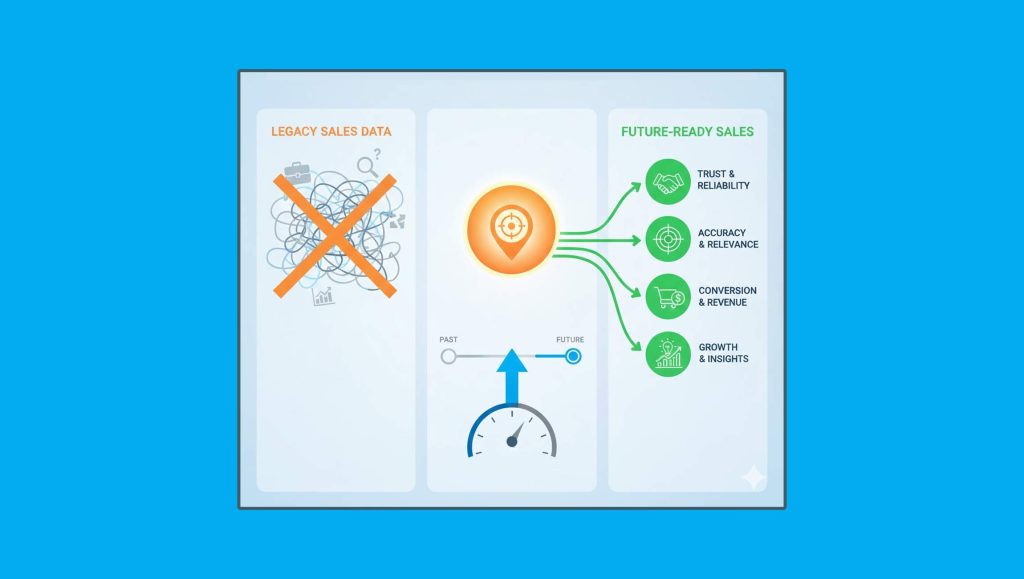

1) Fair and impartial use checks: A common concern about AI is how to prevent bias introduced by humans during the coding process. To address this, companies need to determine what constitutes fairness, actively identify biases within their algorithms and data, and implement controls to avoid unexpected outcomes.

2) Implementing transparency and explainable AI: For AI to be trustworthy, all participants have a right to understand how their data is being used and how the AI system is making decisions. Organizations should be prepared to make their algorithms, attributes and correlations open to inspection.

3) Responsibility and accountability: Trustworthy AI systems need to include policies that clearly establish who is responsible and accountable for their output. This issue epitomizes the uncharted territory of AI: Is it the responsibility of the developer, the tester, or the product manager? Is it the machine learning engineer, who understands the inner workings? Or does ultimate responsibility go higher up the ladder, to the CIO or CEO, who might have to testify before a government body?

4) Putting proper security in place: To be trustworthy, AI must be protected from risks, including cybersecurity risks, that could lead to physical and/or digital harm. Companies need to thoroughly consider and address all relevant risks and then communicate those risks to users.


5) Monitoring for reliability: For AI to achieve widespread adoption, it must be as robust and reliable as the traditional systems, processes and people it is augmenting. Companies need to ensure their AI algorithms produce the expected results for each new data set. They also need established processes for handling issues and inconsistencies if they arise.

6) Safeguarding privacy: Trustworthy AI must comply with data regulations and use data only for its stated and agreed-upon purposes. Organizations should ensure that consumer privacy is respected, that customer data is not leveraged beyond its intended and stated use, and that consumers can opt in to and out of sharing their data.

The Trustworthy AI framework also aims to help businesses increase brand equity and trust, which can lead to new customers, better employee retention and more customers opting in to share data. Other potential benefits include increased revenue and reduced costs from more accurate decision-making based on better data sources, as well as lower legal and remediation costs.

“Having the appropriate measures in place to harness the power of AI while simultaneously managing risk can help organizations gain exponential benefits,” said Nitin Mittal, AI co-leader, Deloitte Consulting LLP. “The right safeguards not only assist in preserving trust, but also enable organizations to innovate, break boundaries, and drive better outcomes in the Age of With™.”

In addition to helping clients drive Trustworthy AI within their organizations, Deloitte practices the framework’s principles by infusing an ethical mindset within its own organization. It does so through internal development initiatives, including a ‘Tech Savvy’ program that engages practitioners in continuous learning about disruptive technologies, and by incorporating ethical AI into its project review processes. Deloitte also engages external ethics experts and academic institutions to conduct well-rounded client conversations and advance thinking on AI ethics, so that all perspectives are considered.

“Organizations ready to embrace AI must start by putting trust at the center,” said Beena Ammanath, Deloitte AI Institute executive director, Deloitte Consulting LLP. “We are devoted to not only helping our clients navigate AI ethics, but also in maintaining an ethical mindset within our own organization.”
