Introduces First Open Source Multi-Cloud Framework for Machine Learning Lifecycle; Simplifies Distributed Deep Learning; Delivers Data Reliability and Performance at Scale

Despite the allure of artificial intelligence (AI), most enterprises are struggling to succeed with AI. Preliminary findings of a Databricks-commissioned research study reveal that 96 percent of organizations say data-related challenges are the most common obstacle when moving AI projects to production.

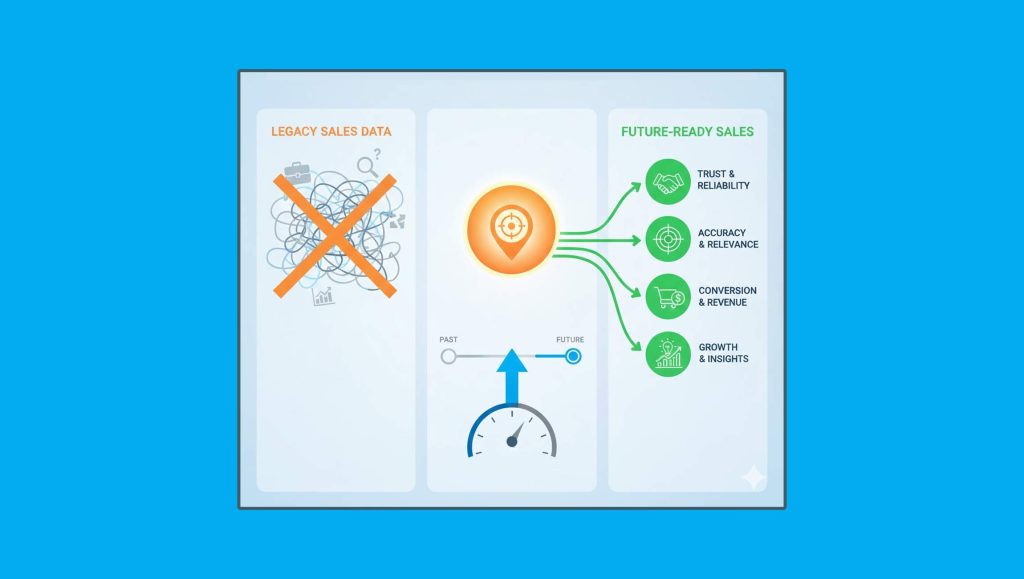

Data is the key to AI, but data and AI sit in technology and organizational silos.

Databricks, the leader in unified analytics, founded by the original creators of Apache Spark™, addresses this AI dilemma with the Unified Analytics Platform. Recently, at the Spark + AI Summit in San Francisco, an annual gathering of 4,000 data scientists, engineers and analytics leaders, Databricks launched new capabilities to lower the barrier for enterprises to innovate with AI. These new capabilities unify data and AI teams and technologies: MLflow for developing an end-to-end machine learning workflow; Databricks Runtime for ML for simplifying distributed machine learning; and Databricks Delta for data reliability and performance at scale.

“MLflow is an end-to-end multi-cloud framework for developing machine learning applications in a repeatable manner while having the flexibility to deploy those applications reliably in production across multiple cloud environments.”

Watch the Spark + AI Summit 2018 keynotes live.

“To derive value from AI, enterprises are dependent on their existing data and ability to iteratively do machine learning on massive data sets. Today’s data engineers and data scientists use numerous, disconnected tools to accomplish this, including a zoo of machine learning frameworks,” said Ali Ghodsi, co-founder and CEO at Databricks.

Ali added, “Both organizational and technology silos create friction and slow down projects, becoming an impediment to the highly iterative nature of AI projects. Unified Analytics is the way to increase collaboration between data engineers and data scientists and unify data processing and AI technologies.”

“Competition for data science talent is so fierce because the potential for AI and deep learning to disrupt industries is now widely understood. To maximize the happiness of our data scientists we sought out managed cloud service providers that can deliver an on-demand, self-service experience and proactive, expert support,” said Justin J. Leto, Principal Big Data Architect at Bechtel, one of the most respected engineering, construction, and project management companies in the world.

Justin added, “Databricks’ Unified Analytics Platform provides our data scientists with usable data, and keeps our engineers focused on AI solutions in production instead of troubleshooting ops issues.”

MLflow: Improving Efficiency and Effectiveness of Machine Learning with End-to-End Workflow

Data is critical to both training and productionizing machine learning. But using machine learning in production is difficult because the development process is ad hoc, lacking tools to reproduce results, track experiments and manage models. To address this problem, Databricks introduces MLflow, an open source, cross-cloud framework that can dramatically simplify the machine learning workflow.

With MLflow, organizations can package their code for reproducible runs, execute and compare hundreds of parallel experiments, leverage any hardware or software platform, and deploy models to production on a variety of serving platforms. MLflow integrates with Apache Spark, scikit-learn, TensorFlow and other open source machine learning frameworks.
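To make the idea of tracking and comparing experiments concrete, here is a minimal, standard-library-only sketch in Python. It is not MLflow's API: the `RunTracker` class, its `log_run` and `best_run` methods, and the toy `train` function are hypothetical names invented for illustration. The sketch only shows the general pattern of recording each run's parameters and metrics so that runs can be compared later.

```python
import json
import tempfile
import uuid
from pathlib import Path


class RunTracker:
    """Hypothetical, minimal experiment tracker (an illustration, not MLflow's API)."""

    def __init__(self, root):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params, metrics):
        """Persist one run's parameters and metrics as a JSON file; return its id."""
        run_id = uuid.uuid4().hex
        record = {"run_id": run_id, "params": params, "metrics": metrics}
        (self.root / f"{run_id}.json").write_text(json.dumps(record))
        return run_id

    def best_run(self, metric, higher_is_better=True):
        """Load every recorded run and return the one with the best `metric`."""
        runs = [json.loads(p.read_text()) for p in self.root.glob("*.json")]
        pick = max if higher_is_better else min
        return pick(runs, key=lambda r: r["metrics"][metric])


def train(learning_rate):
    """Stand-in for a real training job: a toy score that peaks at 0.1."""
    return 1.0 - abs(learning_rate - 0.1)


# Record three runs with different hyperparameters, then compare them.
tracker = RunTracker(tempfile.mkdtemp())
for lr in (0.01, 0.1, 0.5):
    tracker.log_run({"learning_rate": lr}, {"accuracy": train(lr)})

best = tracker.best_run("accuracy")
print(best["params"])
```

Because every run is written to disk with its parameters, any earlier result can be looked up and reproduced. A real tracking framework adds code packaging, artifact storage and model deployment on top of this basic record-keeping.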

“When it comes to building a web or mobile application, organizations know how to do that because we’ve built toolkits, workflows, and reference architectures. But there is no framework for machine learning, which is forcing organizations to piece together point solutions and secure highly specialized skills to achieve AI,” said Matei Zaharia, co-founder and Chief Technologist at Databricks.

Matei added, “MLflow is an end-to-end multi-cloud framework for developing machine learning applications in a repeatable manner while having the flexibility to deploy those applications reliably in production across multiple cloud environments.”

Databricks Runtime for ML: Simplifying and Enabling Distributed Deep Learning

Deep learning continues to grow in popularity, driven by applications such as natural language processing, image classification, and object detection. Growing data volumes enable organizations to build better models, but the added data complexity increases training time. This tension has led organizations to adopt distributed deep learning with frameworks such as TensorFlow, Keras, and Horovod, and to take on the complexity of managing distributed computing.

Databricks Runtime for ML eliminates this complexity with pre-configured environments tightly integrated with the most popular machine learning frameworks, like TensorFlow, Keras, XGBoost, and scikit-learn. Databricks also addresses the need to scale deep learning by introducing GPU support for both AWS and Microsoft Azure. Data scientists can now feed data sets to models, evaluate, and deploy cutting-edge AI models on one unified engine.

Databricks Delta: Simplifying Data Engineering

According to research commissioned by Databricks, it takes organizations more than seven months to bring AI projects to completion, with 50 percent of the time spent on data preparation.

Currently, organizations build their big data architectures using a variety of systems, which increases cost and operational complexity. Data engineers are struggling to simplify data management and provide clean, performant data to data scientists, ultimately hindering the success of AI initiatives.

As a key component in Databricks’ Unified Analytics Platform, Delta extends Apache Spark to simplify data engineering by providing high performance at scale, data reliability through transactional integrity, and the low latency of streaming systems. With Delta, organizations are no longer required to make a tradeoff between storage system properties or spend their resources moving data across systems. Hundreds of applications can now reliably upload, query, and update data at massive scale and low cost, ultimately making datasets ready for machine learning.