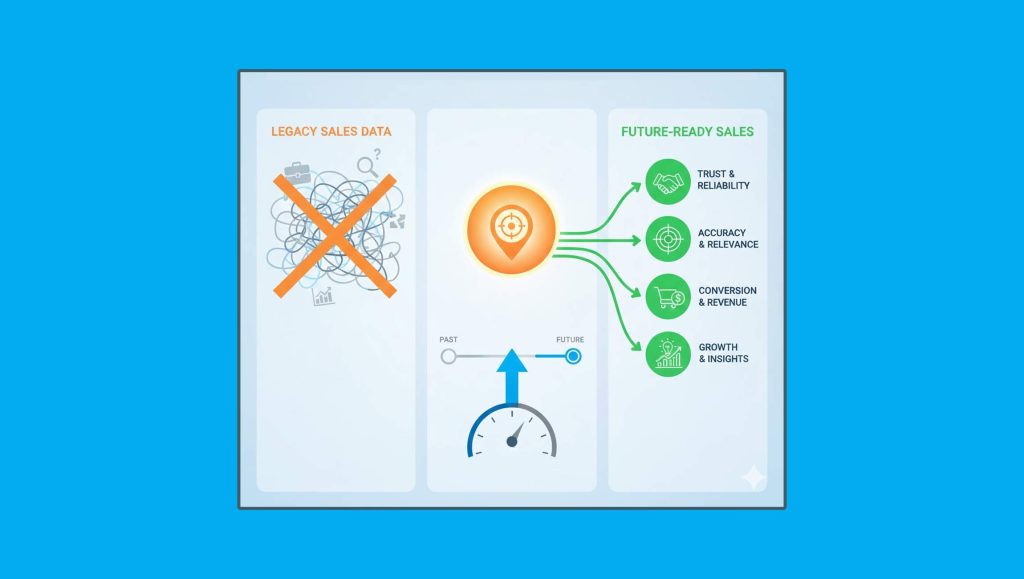

Confluent, the event streaming pioneer, announced the next stage of its Project Metamorphosis initiative, which aims to build the next-generation event streaming platform that any organization can put at the heart of its business. As part of the Infinite release, Confluent announced infinite retention, a new capability in Confluent Cloud that creates a centralized platform for all current and historic event streams with limitless storage and retention. Organizations can now democratize access to event data and ensure all events are stored and accessible for as long as needed. By combining all relevant past and current event data, organizations can build richer application experiences and make more informed data-driven decisions.

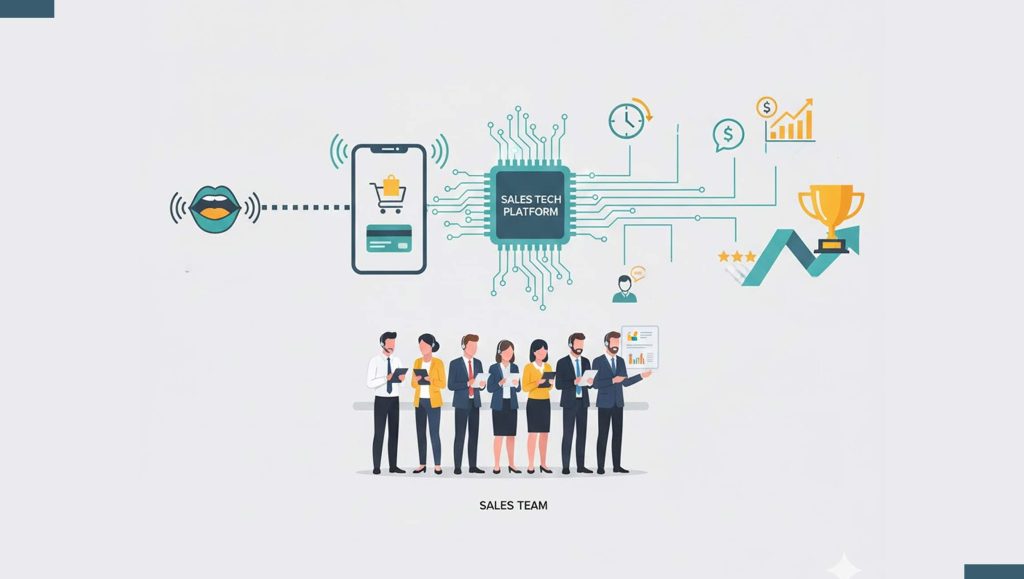

“Without the context of historical data, it’s difficult to take action on real-time events in an accurate, meaningful way,” said Jay Kreps, co-founder and CEO, Confluent. “We’ve removed the limitations of storing and retaining events in Apache Kafka with infinite retention in Confluent Cloud. With event streaming as a business’s central nervous system, applications can pull from an unlimited source of past and present data to quickly become smarter, faster, and more precise.”

Contextually rich, personalized applications are in especially high demand as digital experiences have replaced in-person interactions during the pandemic. To build these sophisticated touch points, applications need input data on what is happening right now and how that relates to what happened in the past. This is incredibly challenging and prohibitively costly for existing data architectures, especially when new events move through organizations at gigabyte-per-second scale. And due to high storage costs and complexities in data balancing, events are typically retained in Apache Kafka for only seven days. This limits event streaming use cases, like year-over-year analysis and predictive machine learning, and is often not long enough to meet compliance requirements.
