Enterprise leaders were promised that AI agents would automate large swaths of customer service. The reality is more sobering. MIT’s recent research shows 95% of generative AI systems do not make it to production, while many agent projects are paused or scrapped before they ever touch a live customer. In controlled office simulations, language model agents still miss multi-step tasks at an uncomfortable rate. None of this should surprise anyone who has tried to move from a demo to a dependable deployment. The five percent that are live and succeeding show that the gap is not hype versus hope but discipline: the work of translating impressive model capabilities into safe, reliable systems that improve customer experience at scale.

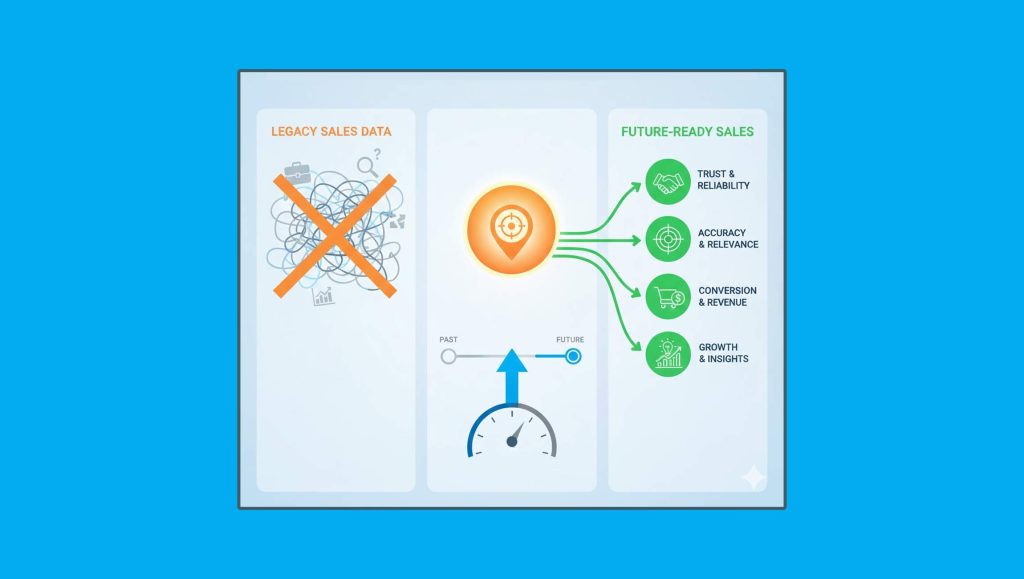

The root causes are consistent across organizations. Too many initiatives are launched to chase a trend rather than to solve a clearly defined customer problem. Too few are designed as systems that learn, adapt, and improve over time. Many organizations still treat this as a tool purchase rather than a strategic shift to future-proof the contact center. Governance, testing, and risk controls then arrive as afterthoughts. I call this the learning gap. Teams prove a concept in a sandbox, then struggle to harden it for production because they lack the operational scaffolding. If you want AI agents to succeed, you have to build for the messy reality of customer operations from day one.

Compounding the problem is a marketplace that rewards buzzwords. The term “AI agent” is being stretched to cover everything from deterministic chatbots to systems that can plan, call tools, and take actions autonomously. Some vendors even claim their agents never hallucinate. That tells you what you need to know. If a system never hallucinates, it is likely not using generative models for open-ended reasoning or language generation. There is nothing wrong with deterministic flows when the use case calls for them. The problem is labeling them as agents and implying the same capability envelope you would expect from a true agentic system. CX leaders deserve clarity because the safety posture, testing regime, staffing model, and expected outcomes differ widely depending on which technology you are actually buying.

Testing is where many programs break. A handful of happy-path prompts will never reveal how an agent behaves under realistic load, incomplete context, edge cases, or adversarial inputs. Production-ready agents require scenario design that mirrors real customer journeys, synthetic and historical data to stress decision points, continuous monitoring, and clear rollback procedures. Human-in-the-loop must mean more than a generic escalation path. It should define how human experts supervise, correct, and improve generative AI agent performance without taking over every interaction, and it should set explicit thresholds for confidence and containment. The goal is not to eliminate risk, which is impossible, but to bound it and make it visible.
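To make the idea of explicit confidence thresholds concrete, here is a minimal sketch of how a single agent turn might be routed between autonomous handling, human review, and full escalation. It assumes the agent exposes a confidence score between 0 and 1 and that each intent is flagged as in or out of the approved automation scope; the names (`route_turn`, `Thresholds`, `route_mix`) and the threshold values are hypothetical placeholders, not any vendor’s API.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"      # agent responds or acts on its own
    HUMAN_REVIEW = "human_review"  # agent drafts; a human expert approves or corrects
    ESCALATE = "escalate"          # a human takes over the interaction


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical placeholder values; real thresholds should be tuned per intent
    # from scenario testing and historical data.
    autonomous_min: float = 0.85
    review_min: float = 0.60


def route_turn(confidence: float, intent_allowed: bool,
               t: Thresholds = Thresholds()) -> Route:
    """Decide how one agent turn is handled, given a confidence score in [0, 1]."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    # Intents outside the approved automation scope always go to a human.
    if not intent_allowed:
        return Route.ESCALATE
    if confidence >= t.autonomous_min:
        return Route.AUTONOMOUS
    if confidence >= t.review_min:
        return Route.HUMAN_REVIEW
    return Route.ESCALATE


def route_mix(routes: list[Route]) -> dict[str, float]:
    """Share of turns per route, so supervisors can see where risk is landing."""
    if not routes:
        return {}
    counts = Counter(routes)
    return {r.value: counts[r] / len(routes) for r in Route}


if __name__ == "__main__":
    turns = [route_turn(0.92, True), route_turn(0.70, True), route_turn(0.95, False)]
    print(turns)
    print(route_mix(turns))
```

The middle band is the point of the design: human experts correct drafts instead of taking over every conversation, and the route mix gives a simple, visible measure of how much the agent is actually handling on its own.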

So how should CX leaders navigate the current market and move toward real outcomes rather than stalled pilots? Start by interrogating the vocabulary. Ask vendors to be specific about whether their solution is generative, deterministic, or a hybrid. Ask what “agentic” actually means in their product. Ask whether the system only retrieves knowledge or can take actions through APIs, ticketing, and commerce systems. If they mention human-in-the-loop, clarify whether humans supervise and guide autonomous behavior or simply catch failures at the end of a conversation. Precision in language will save months of misaligned expectations.

Next, require evidence that maps to production reality. Analyst coverage can indicate maturity, but the research cycle often lags fast-moving categories, so look for signs of operational depth. Public reference customers matter, yet many large enterprises cannot disclose details. In those cases, look for conference presentations, technical deep dives, or joint webinars where customers discuss the deployment in their own words. Case studies should cite measurable CX outcomes tied to live environments, not just promising internal tests. You are looking for proof that the vendor can connect to your stack, withstand your traffic, and meet your risk standards.

Engage shortlisted vendors early. A direct conversation quickly reveals whether you’re seeing a prototype or a hardened platform, and it brings security, compliance, and operations into the process before momentum stalls. Early engagement helps you calibrate internal effort, integration paths, ownership models, and the right level of automation for your first wave of use cases. Start small with Level 1 automation (straightforward, well-instrumented intents with tight confidence thresholds and clear human supervision), then graduate to deeper tool use and, only after proven safety and value, to full autonomy. Gate each step with evidence (containment targets, error budgets, CX impact) and an explicit rollback plan so you’re building on wins rather than betting the farm.
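Gating each step with evidence can be as simple as a written rule that turns observed results into one of three decisions. The sketch below assumes you track, per automation wave, how many conversations ran, how many were contained, how many had reviewer-flagged errors, and the CSAT change against a human-only baseline. The field names and every number in `Gate` are hypothetical placeholders to illustrate the shape of the check, not recommended targets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WaveEvidence:
    """Observed results for one automation wave over an evaluation window (hypothetical fields)."""
    conversations: int
    contained: int      # resolved without a human takeover
    errors: int         # conversations with a policy or accuracy error, as judged by reviewers
    csat_delta: float   # CSAT change versus the human-only baseline, in points


@dataclass(frozen=True)
class Gate:
    """Hypothetical promotion criteria; placeholders, not recommendations."""
    min_conversations: int = 500
    containment_target: float = 0.60
    error_budget: float = 0.02    # maximum tolerated error rate
    min_csat_delta: float = 0.0   # customer experience must not get worse


def evaluate_gate(ev: WaveEvidence, gate: Gate) -> str:
    """Return 'promote', 'hold', or 'rollback' for the current automation level."""
    if ev.conversations < gate.min_conversations:
        return "hold"  # not enough evidence to decide yet
    error_rate = ev.errors / ev.conversations
    # Error budget exhausted or CX harmed: step back to the previous level.
    if error_rate > gate.error_budget or ev.csat_delta < gate.min_csat_delta:
        return "rollback"
    if ev.contained / ev.conversations >= gate.containment_target:
        return "promote"  # evidence supports moving to deeper tool use
    return "hold"


if __name__ == "__main__":
    wave = WaveEvidence(conversations=1000, contained=700, errors=10, csat_delta=0.5)
    print(evaluate_gate(wave, Gate()))
```

The safety checks run before the containment check, so a wave that contains well but breaks its error budget still rolls back. A stricter variant might also trigger rollback on an absolute error count before the minimum sample size is reached.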

Finally, insist on a proof of value (POV) that resembles the real world. A meaningful POV validates the core claims, exposes integration complexity, and clarifies the resources and timelines you will need to succeed. Free POVs can be useful for quick experiments but often limit scope and vendor commitment. Paid POVs require budget and approvals yet typically provide clearer milestones, deeper integration, and a more realistic environment. The point is not to pay for a pilot you do not need. It is to create the conditions where you can say with confidence that the agent can handle your priority use cases safely and repeatedly.

A small but growing cohort is already live at scale, and their pattern is consistent. They start with Level 1 automation on narrow, high-value intents, prove containment and CX impact, then graduate to tool use and partial autonomy behind clear safety gates. They run with policy, observability, SLOs and error budgets, and rollback plans from day one, and they treat agents like production systems: versioned, tested, and change-managed, not demos.

If you are a CX leader, this is the moment to press for clarity, proof, and discipline. Ask precise questions about technology and autonomy. Require production-grade evidence. Engage early to align stakeholders. Make the POV count. Do that and you will move from experiments that impress in a slide deck to systems that deliver real containment, faster resolution, and better customer experiences at scale. The path from five percent to something much larger is not about waiting for a breakthrough. It is about building the operating model that makes AI dependable for customers and for the people who serve them.
