The new flagship product from Datameer upends a three-decade-old approach to data analytics

Datameer today announces the introduction of a breakthrough platform, Datameer Spotlight, that flips the traditional central data warehouse paradigm on its head and enables organizations to run analytics at scale in any environment and across data silos at a fraction of the cost.

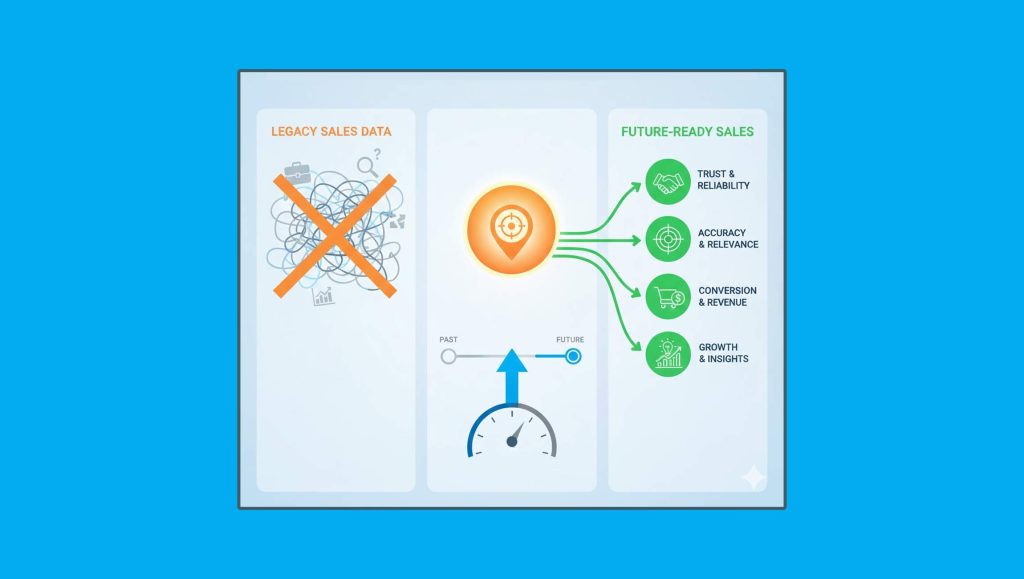

Today’s approach to leveraging data for analytics has remained largely unchanged for almost three decades: Organizations pipe all enterprise data into a centralized data warehouse or data lake in what is an expensive, time-consuming process.

Despite company after company failing at this elusive data centralization quest, leaving employees unable to easily find and access the data that’s relevant for their needs, companies such as Oracle, Teradata, and Informatica — then, later on, AWS, Google Cloud, Azure, Snowflake, Talend, and Fivetran — have thrived under this three-decade-old model.

Promoting the vision of a single source of truth that delivers a 360-degree view of the customer, vendors have been competing to store a copy of your data in their data centers using their tools. While the advent of the cloud data warehouse brought incremental improvements to organizations by saving them from needing to plan for excess storage and compute on-premises, it hasn’t changed the fundamental “duplicate and centrally store data” paradigm — despite the fact that this approach is unwieldy, costly and leaves enterprises struggling to leverage the full value of their data.

Costly & Wasteful

Data replication is not free. Whether on-premises or in the cloud, data replication requires storage, tools, and highly skilled, highly specialized data engineers to code and maintain complex ETL scripts. Unfortunately, demand for data engineers has grown 50%, and salaries have increased by 10% year over year, according to Dice.

Data replication also has a non-negligible impact on the environment: nearly 10 million data centers have been built over the last decade, according to IDC. Data centers now have the same carbon footprint as the entire aviation industry had pre-pandemic.

Lengthy & Unwieldy

Business users need instant access to data to make real-time business decisions. Current batch ETL processes for moving data don’t give users the instant access they need. Making matters worse, it takes days, weeks, and sometimes months to initially set up a data pipeline. Data pipelines’ specifications can also get lost in translation between the business domain experts and the data engineers who build them, complicating things further.

What’s more, business users don’t always know what transformations, cleansing, and manipulation they’ll want to apply to the data, and having to go back and forth with data engineers makes the discovery process very cumbersome. Hadoop was designed to solve this issue with schema on read. But the complexity of the technology combined with the still monolithic data lake model doomed this ecosystem.

Governance & Security Risks

Replicating data via data pipelines comes with its own regulatory, compliance, and security risks. The centralized data approach gave IT teams the illusion of tighter control and data governance. However, this approach backfired. With data sets never exactly meeting business needs, different teams began to set up their own data marts, and the proliferation of these only exacerbated data governance issues.

Sunk Costs & Throwing Good Money after Bad

Over the years, organizations have made significant investments to build their version of the enterprise data warehouse. Despite these projects falling short of their promises, organizations have been committing what economists call the sunk cost fallacy: throwing more money at the projects in an attempt to fix them (for example, recruiting more specialized engineers and buying more tools) rather than looking for alternative approaches and starting anew.

Enterprises will, for example, move some of their data to the cloud on AWS, Azure, Google, or Snowflake on the promise of faster, cheaper, more user-friendly analytics. Migration projects are rarely 100% successful and often result in more fragmented data architectures that make it harder to perform analytics in hybrid or multi-cloud environments. Businesses might purchase Alteryx, for example, to enable domain experts to transform data locally on their laptops, which contributes to more data chaos and the proliferation of ungoverned data sets. After that, they’ll purchase a data catalog to index that data and help business users find it. On top of that, the IT team will want to invest in tools to add a layer of governance for peace of mind. Data stacks often end up thrown together like the Winchester Mystery House, becoming a money pit for enterprises.

And yet despite these massive investments:

- 60% of executives aren’t very confident in their data and analytics insights (Forrester)

- 73% of business users’ analytical time is still spent searching, accessing, and prepping data (IDC)

- More than 60% of data in an enterprise is not used for analytics (Forrester)

Datameer solves these data challenges with its latest product, Datameer Spotlight, a virtual semantic layer that embraces a distributed data model, also known as data mesh.

With over 200 connectors and counting, Datameer Spotlight provides business end-users virtual access to any type of on-prem or cloud data sources—including data warehouses, data lakes, and any applications—and lets them combine and create new virtual data sets specific to their needs via a visual interface (or a SQL code editor for more advanced users), with no need for data replication. The data is left in place at the source. This new approach solves for:

- Data governance: data remains at the source, and no data replication is needed.

- Cost: with no need for ETL tools, a central data warehouse, data cataloging, a data prep tool, data engineering, or a middleman between the data and the end-user, the solution ends up at a fraction of the cost of traditional approaches.

- Speed and agility: connecting Datameer Spotlight to a new data source takes only as long as entering your credentials for that source. Once connected, business users can create new datasets across data sources in a few clicks.

- Data discoverability: it virtualizes your data landscape by indexing the metadata of every data source and creating a searchable inventory of assets that can easily be mined by analysts and data scientists—all without moving any data.
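To make the idea of a virtual data set concrete, here is a minimal sketch of the general technique: a view that joins two separate sources at query time, with no rows copied into a central store. It is not Datameer Spotlight's implementation or API. It assumes two local SQLite files stand in for the silos, and every name in it (`crm.db`, `billing.db`, `customers`, `invoices`, `build_demo_sources`, `revenue_by_customer`) is hypothetical.

```python
import sqlite3
import tempfile
from pathlib import Path


def _create_source(path: Path, ddl: str, insert_sql: str, rows: list) -> None:
    """Create one stand-alone SQLite file that plays the role of a data silo."""
    conn = sqlite3.connect(str(path))
    try:
        conn.execute(ddl)
        conn.executemany(insert_sql, rows)
        conn.commit()
    finally:
        conn.close()


def build_demo_sources(folder: Path) -> tuple:
    """Build two independent sources (hypothetical CRM and billing systems)."""
    crm_path = folder / "crm.db"
    billing_path = folder / "billing.db"
    _create_source(
        crm_path,
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)",
        "INSERT INTO customers VALUES (?, ?)",
        [(1, "Acme"), (2, "Globex")],
    )
    _create_source(
        billing_path,
        "CREATE TABLE invoices (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)",
        "INSERT INTO invoices VALUES (?, ?, ?)",
        [(1, 1, 100.0), (2, 1, 50.0), (3, 2, 75.0)],
    )
    return crm_path, billing_path


def revenue_by_customer(crm_path: Path, billing_path: Path) -> list:
    """Query a virtual data set that spans both sources without copying rows."""
    conn = sqlite3.connect(":memory:")  # holds only the view definition
    try:
        # Attach each source where it lives; the data stays in its own file.
        conn.execute("ATTACH DATABASE ? AS crm", (str(crm_path),))
        conn.execute("ATTACH DATABASE ? AS billing", (str(billing_path),))
        # A TEMP view may reference tables in attached databases. It stores
        # the query text only, so the join runs against the sources on read.
        conn.execute(
            """
            CREATE TEMP VIEW revenue_view AS
            SELECT c.name AS customer, SUM(i.amount) AS revenue
            FROM crm.customers AS c
            JOIN billing.invoices AS i ON i.customer_id = c.id
            GROUP BY c.name
            """
        )
        return conn.execute(
            "SELECT customer, revenue FROM revenue_view ORDER BY customer"
        ).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        crm, billing = build_demo_sources(Path(tmp))
        print(revenue_by_customer(crm, billing))
```

The view stores a query rather than data, so each read reflects the current contents of both sources. A production system would also need connectors, credentials, and query pushdown for remote sources, none of which this sketch attempts.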
